Hiep Nguyen

October 16, 2024

•

30 min read

Generative AI is part of Machine Learning (ML). ML means training a machine with large amounts of data so it can recognize patterns and predict new data.

Since 2020, tools like ChatGPT have transformed industries. By 2024, nearly every application uses AI, improving products and boosting productivity beyond manual processes. Businesses benefit the most, enhancing both products and internal workflows, with IT teams leading the implementation.

As AI becomes widespread, people develop different pre-trained models for different purpose like text generation, video, audio, image generation, we can reuse and fine-tune them further with our own data, and they’ll use large language models to create content based on input - That’s Generative AI.

Personally, I think there’s already plenty of information on generative AI use cases. You likely use some of them daily and are aware of the risks and limitations of overusing AI. I won’t dive into those details here to keep things brief.

A foundation model is a large machine learning model trained on vast amounts of data, typically using unsupervised learning, that can be adapted to a wide range of tasks. These models are called “foundational” because they serve as a base for many specific applications after being fine-tuned with smaller, task-specific datasets.

Numerous models are available, such as GPT for text generation or DALL·E for image creation. Selecting the right model depends on your use case and the content you want to generate. Evaluate each model’s capabilities and pick one that aligns with your goals.

A general-purpose model is capable of generating human-like text, answering general knowledge questions, and performing simple tasks such as summarization. However, it often lacks deep expertise in specific domains. To improve its performance and relevance in particular contexts, we can fine-tune the model using a narrower dataset that focuses on domain-specific information. This approach allows the model to provide faster and more relevant responses tailored to business needs.

For example, when you ask a developer about a function, they typically respond with a broad explanation that applies to programming in general. Only when you specify that you want information related to JavaScript will they provide a more focused and detailed answer specific to that language.

By fine-tuning the model with relevant data, we enhance its ability to respond accurately and efficiently in specialized contexts, making it more useful for specific business applications.

Prompt Engineering is a unique process that does not require labeled data or significant computational power to train a pre-trained model. Instead, by leveraging the model’s ability to understand natural language, we can guide a large language model (LLM) through a series of questions and instructions. This allows us to adjust its behavior so that it produces the desired and relevant output.

Since the quality of the output is entirely dependent on the input prompt, it is essential for us to understand the capabilities and characteristics of the LLM. By doing so, we can craft high-quality prompts that achieve the best results.

A well-structured prompt consists of four key components:

To create a better prompt, we must ensure that it is clear and concise. The context should be fully provided, and we should clearly state the desired output format. For complex tasks, it is often beneficial to break the task down into multiple smaller tasks. This approach helps the LLM gain a better understanding of what it needs to do, reducing the likelihood of confusion.

Above all, we need to experiment with and research the characteristics of the LLM. Understanding what drives the model will help us formulate prompts that yield better results.

Now that we’ve got a good overview of what Generative AI is, let’s take a closer look at how different industries are actually using it in their applications. We’ll check out how real-world businesses are applying it to their application. This will give us a clearer picture of the real impact Generative AI is having and the ways it’s changing the game in various fields.

As we discussed, generative AI has become an essential part of every application today. People are finding ways to make it more accessible. AWS provides this accessibility to generative AI through Amazon Bedrock.

At a high level, Amazon Bedrock gives us access to the most popular foundation models. It also enables us to build, test, deploy, and scale these models for our own use cases, all with security guarantees. Additionally, it integrates easily with other AWS services, such as:

Another benefit of Amazon Bedrock is it’s serverless. This means there’s no need for server provisioning or management. We discussed this in the Retool Serverless Inventory Management series before.

As we discussed in the previous section, the foundation model serves as the heart of the application. Everything is built around it, leveraging its ability to process and generate new content. This model is trained on a large and diverse dataset, enabling it to perform a wide variety of tasks effectively.

The model’s performance on a specific task depends on several factors: the type of data it was trained on, the amount of data used, and the intended purpose of the model. Depending on these aspects, the model may excel at some tasks while struggling with others.

In the context of AWS, the foundation model is hosted and managed by AWS, which provides us access to it through an API. This infrastructure allows developers to easily integrate the model’s capabilities into their applications, making it a powerful tool for content generation and other tasks.

In our case, we have three main parameters that play a crucial role: Temperature, Top P, and Top K. While I don’t fully grasp all the intricacies of these parameters yet, I will do my best to explain them in a straightforward manner that is easy for both you and me to understand.

To generate a complete output, the model creates each token sequentially, one after the other. The three parameters mentioned above help the model decide which token to select at each step.

Let’s begin by discussing Temperature. This parameter controls how random or creative the selection of tokens will be. The default value for Temperature is set at 1. When the Temperature is lower than 1, the model tends to choose words that are more predictable and common. In other words, it focuses on selecting safer options that are likely to fit well in the sentence. On the other hand, if we increase the Temperature above 1, the model becomes more adventurous. It begins to consider a broader range of words, including those that are less common or more unusual. As a result, the model will make more random choices, introducing creativity into the output.

Once the model has generated a pool of chosen words based on the Temperature setting, the next step is to determine which word to use from this list. This is where the parameters Top P and Top K come into play.

Let’s start with Top K. This parameter is quite simple to understand. If we set Top K to 10, the model will only consider the top 10 most likely words from the generated pool and will select one from this limited set. This means that the model focuses on a specific range of high-probability options, making it less likely to choose obscure or unexpected words.

Now, let’s move on to Top P. This parameter works in a somewhat similar way to Top K, but with a key difference. Instead of specifying a fixed number of options to consider, Top P focuses on the cumulative probability of the chosen words. When we set a Top P value, the model selects tokens until the sum of their probabilities equals this value. For instance, if we set Top P to 0.9, the model will choose from the most likely words until the total probability of those words reaches 90%. This approach allows for more flexibility, as it can include a varying number of tokens based on their individual probabilities rather than sticking to a strict count.

Response length sets minimum and maximum token counts. It sets a hard limit on response size. The following table lists minimum, maximum, and default values for the response length parameter.

A token is a unit of text that the model processes, usually contains 4-6 text characters. Why we need token?

Usually, a LLM have a short term memory to hold the context of the conversation in a session. This make the interaction between user and AI more meaningful cause the AI can reference back to previous interaction and provide the next response more relevant. But because a LLM itself is not a data storage, it jobs is to process and generate data, so short term of its usually limited (depend on the model), can only remember a limited number of token and all they gone after session ended.

To implement some kind of long term memory and persist between multiple session to improve and personalized the interaction with user, we need a external storage for it.

A model is built for general purposes; it doesn’t know your business data. However, we can include that data as context in a prompt (as discussed in the section about different parts of a prompt). Each time we want the model to answer a question using business data, we provide the data in the prompt. But how can we make this easier? We have a framework to automate the process: for every input, it automatically retrieves relevant data from storage, combines it with the input, and gives it to the model.

Before we get into this, let’s briefly introduce vectorized data.

As a business, we usually work with specialized data from our domain, which can come in various types: images, text, audio, video, etc. However, machines don’t inherently understand these formats. They process numbers more efficiently. So, we convert these diverse data types into a common format that can be processed.

Researchers found that multi-dimensional vectors are the best way to represent this data. Although it’s somewhat mathematical, the core idea is simple: each data point becomes a point in space. After transformation—called embedding—similar points naturally cluster together, while dissimilar ones stay apart.

This image helps grasp the idea: data is represented in multiple dimensions (possibly 300), not just the three we typically imagine.

To embed data, we use specialized machine learning models like Amazon Titan Embed Text V1 for text and Amazon Titan Embed Image V1 for images.

Once our data is embedded in vector space, we can perform calculations such as:

Now that we’ve found a way to represent different data types in a machine-friendly form, we need to store and retrieve them efficiently. This is where vector databases come into play. The query operators in these databases work by finding items similar to the input vector—so yes, the input must be embedded too.

This is an image that can help you have an overview what it is look like with and without RAG.

So we know what RAG mean. Then knew that, oh the process of collect, processing, embedding our data and store it in the vector, this need complex custom pipeline and it’s hard. We have data store on different place, with different format (PDF, txt,…). And it’s not only about the pipeline, it also about how to scale it and maintain different component of it. Of course Amazon knew that challenge, so they release Knowledge base which is “RAG-as-a-service”, it will handle ingestion, embedding, querying, vector stores (we created or Amazon create one for us on OpenSearch) for us and includes source of retrieve information so we can check original sources to verify the accuracy.

Usually we use Amazon S3 as data source, however we have another as supported like crawl a webpage, SaleForces,…

Read more about crawl a webpage: https://docs.aws.amazon.com/bedrock/latest/userguide/webcrawl-data-source-connector.html

The output of a generative AI model is heavily influenced by the input prompt. Because of this, some individuals may attempt to manipulate the output by providing prompts that are unrelated to our domain. They might use the model as a free generative AI service to serve their own purposes or trick the model into revealing private or sensitive information meant for another user.

It is crucial to be aware of these security concerns and take appropriate measures to ensure that the model is used responsibly and ethically.

How can we prevent the misuse and exploitation of Generative AI, ensuring that both harmful user inputs and unsafe AI responses are blocked?

While the built-in guardrails of foundation models offer some protection, AWS takes it further by providing Amazon Bedrock Guardrails, which can block up to 85% more harmful content. These additional safeguards help ensure safer interactions by filtering out inappropriate or harmful content, making the AI’s responses more reliable and secure.

https://aws.amazon.com/bedrock/guardrails/

Amazon Bedrock Guardrails allow us to define the following:

LLMs (Large Language Models) generate intelligent responses based on their training. They answer questions and produce text, but they cannot take actions or interact with the world in real time. For example, an AI assistant might give you information about ride-sharing services, but it can’t book a ride for you. LLMs rely on the static knowledge built into them during training. Even with updates, the data stays fixed and might not reflect real-time changes. LLMs only generate responses; they can’t perform actions.

To extend AI’s abilities beyond answering questions, we use an ‘Agent.’ An AI Agent lets the LLM take action in the real world. It gives the AI a “body” to interact with systems or environments. The LLM serves as its “brain,” reasoning and deciding what actions to take to complete tasks.

To create an Agent in Amazon Bedrock, the following key inputs are required:

Once the Agent is created, there is an option to enrich its capabilities by integrating a Knowledge Base, allowing it to access domain-specific information and provide more informed responses.

After understanding all the components and the workflow of building a business-specific AI model with Retrieval-Augmented Generation (RAG), we realize the need for a framework that makes this process faster and easier. As more components and workflows are required to fully leverage the power of AI models, LangChain becomes essential.

LangChain helps us:

A chain in LangChain is a series of interconnected components that work together to process input and generate output. Each component performs a specific function, such as transforming input, calling language models, or storing results.

Chains break down complex tasks into smaller, manageable parts, making it easier to build applications. This modular approach allows developers to combine different functionalities and streamline workflows. Depending on the workflow, we can use different types of chains to achieve the desired result, such as sequential chains, branching chains, or memory chains.

In conclusion, Generative AI and AWS Bedrock offer powerful tools for businesses to leverage artificial intelligence in their applications. By understanding and implementing concepts like Retrieval Augmented Generation (RAG), Knowledge Bases, Guardrails, and Agents, organizations can create more intelligent, secure, and context-aware AI solutions.

By embracing these technologies and best practices, companies can stay at the forefront of the AI revolution, delivering more personalized, efficient, and effective services to their users while maintaining the highest standards of security and ethical AI use.

Ready to bring your dashboard vision to life? Contact Retoolers today, and let us help you create a powerful, intuitive dashboard that meets your exact requirements.

Looking to supercharge your operations? We’re masters in Retool and experts at building internal tools, dashboards, admin panels, and portals that scale with your business. Let’s turn your ideas into powerful tools that drive real impact.

Curious how we’ve done it for others? Explore our Use Cases to see real-world examples, or check out Our Work to discover how we’ve helped teams like yours streamline operations and unlock growth.

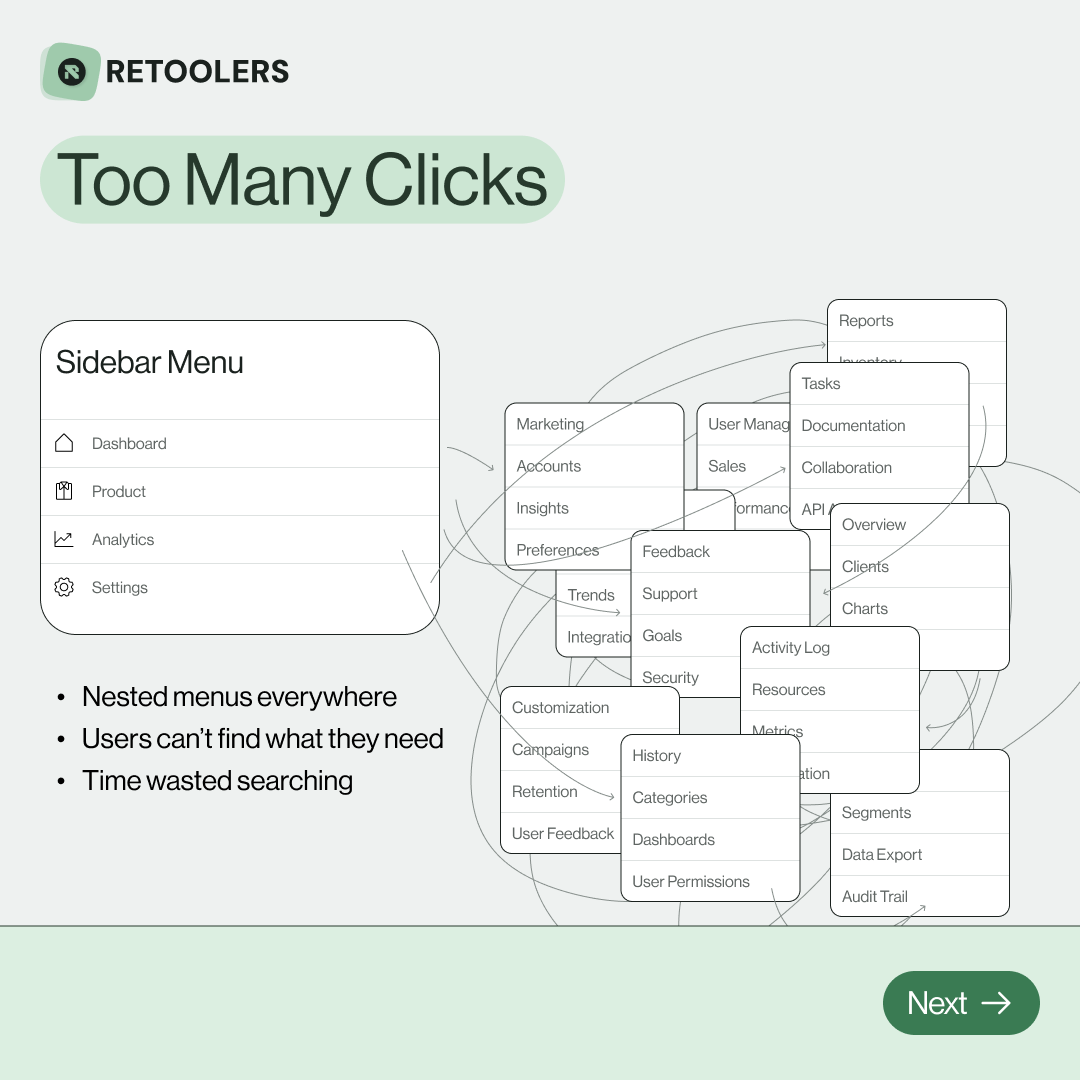

🔎 Internal tools often fail because of one simple thing: Navigation.

Too many clicks, buried menus, lost users.

We broke it down in this 4-slide carousel:

1️⃣ The problem (too many clicks)

2️⃣ The fix (clear navigation structure)

3️⃣ The Retool advantage (drag-and-drop layouts)

4️⃣ The impact (happier teams)

💡 With Retool, you can design internal tools that are easy to use, fast to build, and simple to maintain.

👉 Swipe through the carousel and see how better UX = better productivity.

📞 Ready to streamline your tools? Book a call with us at Retoolers.

🚀From idea → app in minutesBuilding internal tools used to take weeks.

Now, with AI App Generation in Retool, you can describe what you want in plain English and let AI do the heavy lifting.

At Retoolers, we help teams move faster by combining AI + Retool to create tools that actually fit their workflows.

👉 Check out our blog for the full breakdown: https://lnkd.in/gMAiqy9F

As part of our process, you’ll receive a FREE business analysis to assess your needs, followed by a FREE wireframe to visualize the solution. After that, we’ll provide you with the most accurate pricing and the best solution tailored to your business. Stay tuned—we’ll be in touch shortly!