Hiep Nguyen

October 15, 2024

•

25 min read

Integrating AWS Lambda functions into your Retool inventory management system can significantly enhance its efficiency and scalability. This blog provides a comprehensive guide on leveraging Lambda functions to automate tasks, process events, and streamline workflows within your application. By following this guide, you'll learn how to set up Lambda functions, manage permissions, and implement best practices to ensure seamless operation and improved performance of your inventory management system.

We introduced the concept of Event-Driven Architecture (EDA) in the first post of this series. In an event-driven system, events are constantly being generated and received, and we need a way to handle those events properly. Often, this involves running specific logic based on the event.

In a serverless environment, this is where Function-as-a-Service (FaaS) comes in. Instead of managing entire servers, the service provider gives us just the function. This means we only focus on the function's logic and not on the infrastructure behind it.

AWS Lambda is the FaaS offering from Amazon Web Services. It allows us to write code, and AWS handles the rest, such as scaling, high availability, and fault tolerance. You still have some flexibility to adjust settings, and you only pay for the actual time your code is running.

In this blog, we’ll explore how AWS Lambda works while intertwining the restocking use case of RetoolerStock.

An IAM resource policy governs who or which services can invoke the Lambda function. In simpler terms, it acts as a gatekeeper, defining which AWS services are allowed to trigger the function. This is particularly important when dealing with event-driven systems, like the one in RetoolerStock.

RetoolerStock Scenario: DynamoDB Triggers Lambda

In RetoolerStock, DynamoDB stores the inventory data, and DynamoDB Streams capture any changes made to the stock levels (e.g., when an item is sold or received). These changes need to trigger a Lambda function that automatically checks stock levels and decides whether a restocking workflow should be initiated.

For this trigger to happen, the Lambda function must have an IAM resource policy that allows DynamoDB Streams to invoke it. Without this permission, even though DynamoDB has updates, it won’t be able to "call" the Lambda function to process those updates.

Example Policy for DynamoDB Triggering Lambda:

The IAM resource policy might look something like this, where DynamoDB is granted permission to invoke the Lambda function:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "dynamodb.amazonaws.com"

},

"Action": "lambda:InvokeFunction",

"Resource": "arn:aws:lambda:region:account-id:function:function-name"

}

]

}

This policy ensures that DynamoDB can trigger the Lambda function whenever stock levels change in the database, enabling real-time decision-making for restocking.

Other AWS Services Triggering Lambda in RetoolerStock

Besides DynamoDB Streams, other AWS services in RetoolerStock also trigger Lambda functions. For example:

In each of these cases, the Lambda function needs a resource policy that allows these services (S3, EventBridge) to invoke it. Each service must be explicitly granted permission to trigger the Lambda function, ensuring that only authorized services can interact with it.

The IAM execution role determines what actions the Lambda function is allowed to perform when it runs. In the RetoolerStock application, Lambda functions need to interact with various AWS services to perform their tasks, such as reading data from DynamoDB, sending alerts via SNS.

RetoolerStock Scenario: Checking Stock Levels and Sending Alerts

Let’s walk through an example of how the IAM execution role comes into play in RetoolerStock.

Example IAM Execution Role:

Here’s an example of what the execution role might look like for this workflow:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"dynamodb:GetItem",

"dynamodb:Scan"

],

"Resource": "arn:aws:dynamodb:region:account-id:table/InventoryTable"

},

{

"Effect": "Allow",

"Action": "sns:Publish",

"Resource": "arn:aws:sns:region:account-id:LowStockAlerts"

}

]

}

Learn more about IAM policy and Trust policy.

Learn more about Least Privilege Principle.

AWS Lambda can be triggered using different ways (invocation models) depending on the use case and the type of interaction we need.

Synchronous invocation is when a service or user waits for the Lambda function to complete and return a result before continuing. This model is ideal for real-time operations where immediate feedback is required.

RetoolerStock Use Case: Manually Checking and Updating Stock Levels

In RetoolerStock, warehouse managers may manually check and update stock levels through Retool dashboard. The Retool application has a resource to integrated with API Gateway - which basically, a REST resource, which serves as the entry point for user-initiated requests.

Asynchronous invocation allows Lambda functions to handle tasks in the background, where the initiating service does not wait for the function to complete. This is useful for tasks that don’t require an immediate response, like processing data or sending notifications.

RetoolerStock Use Case: Low-Stock Alerts and Restocking Workflow

In RetoolerStock, several tasks happen asynchronously to ensure efficient inventory management without blocking other processes:

In both of these examples, Lambda is invoked asynchronously, meaning the system doesn’t need to wait for the function to finish before moving on to the next task. Here’s a more detailed look at how this works:

An event source mapping is a resource within AWS Lambda that reads items from specified event sources and invokes a Lambda function with batches of records.

In the Asynchronous Invocation model, the initiating service (such as S3, SNS, or EventBridge) sends an event directly to the Lambda function. Lambda immediately starts processing the event without waiting for the consumer to explicitly request it. This works well for background tasks where real-time processing isn’t critical. Once the event is sent, AWS takes care of executing the Lambda function, and the event doesn’t persist in any long-term stream after being handled.

By contrast, in the Polling Invocation model, Lambda doesn’t automatically receive events. Instead, events are placed into a stream or queue, such as DynamoDB Streams, SQS (Simple Queue Service), or Kinesis Streams. Lambda continuously polls this stream to check for new events. The key difference here is that the consumer (Lambda function) decides when to process the event by polling the stream, rather than receiving the event directly upon its creation.

Lambda functions, invoked asynchronously in polling or async models, let you handle execution outcomes. You can set destinations based on success or failure. When the function succeeds, trigger actions like logging. When it fails, send the result to a dead-letter queue (DLQ), retry, or analyze it later. This setup helps manage errors and ensures smoother handling of failed tasks.

Writing a Lambda function is straightforward. Lambda functions can be authored in various supported languages, or you can use a custom runtime to write them in an unsupported language.

In a Lambda function, you need to define a handler method. This method receives an event—which serves as the main parameter, carrying data from other services (the structure of the event depends on the source)—and an optional context object that contains information about the current execution environment.

Each function should focus on doing one thing to improve reusability and composability for more complex use cases. Additionally, the function should only include what it needs to reduce cold start times.

AWS Lambda enables developers to efficiently reuse code and manage dependencies by leveraging Lambda Layers.

For example, in a Node.js application, we can package all the required dependencies into a layer. Here’s how it works:

node_modules folder) for your Node.js project.node_modules folder and any other shared code you'd like to reuse across Lambda functions.This approach ensures that you can reuse common code or dependencies across multiple functions without needing to include them in every Lambda deployment package.

Read more about Node.js Lambda Function Layers here: https://docs.aws.amazon.com/lambda/latest/dg/nodejs-layers.html

Before diving into writing Lambda functions, it's essential to understand that with every invocation, AWS Lambda creates a new instance of the function - new execution environment. This allows for multiple functions to process concurrently. However, this also means that each invocation is stateless and must be treated as new.

Every Lambda function goes through three stages in its lifecycle:

For each invocation, the Lambda function initializes, runs the code, and shuts down. Initially, this process may seem inefficient, and to some extent, it is. However, notice that Lambda waits a short time before shutting down the function. If another invocation occurs during this period, the existing instance is reused, skipping the initialization phase. This is known as a warm start, which results in lower latency since no initialization is required. In contrast, a cold start occurs when a new instance is created, including the initialization phase.

To optimize performance and reduce cold starts, you can:

It's important to note that AWS only starts charging once your code begins to run, meaning charges apply from the beginning of the invoke phase, not during the initialization phase. Optimizing the initialization phase helps reduce latency, but does not impact billing.

To minimize costs, it's essential to balance the allocated memory and the function's timeout setting for optimal performance at the lowest acceptable cost.

Concurrency determines how many instances of your Lambda function can run at the same time, which directly impacts its performance and ability to scale. When a function is invoked, Lambda creates an instance to handle the event. If another request comes in while the first is still running, Lambda spins up a new instance. The total number of instances running simultaneously is the function’s concurrency.

Types of Concurrency

Read more about AWS CloudWatch

Learn more about AWS Lambda through 20 videos of ServerlessLand: https://serverlessland.com/content/service/lambda/guides/aws-lambda-fundamentals/what-is-aws-lambda

Learn more about: Tags, alias

Ready to bring your dashboard vision to life? Contact Retoolers today, and let us help you create a powerful, intuitive dashboard that meets your exact requirements.

Looking to supercharge your operations? We’re masters in Retool and experts at building internal tools, dashboards, admin panels, and portals that scale with your business. Let’s turn your ideas into powerful tools that drive real impact.

Curious how we’ve done it for others? Explore our Use Cases to see real-world examples, or check out Our Work to discover how we’ve helped teams like yours streamline operations and unlock growth.

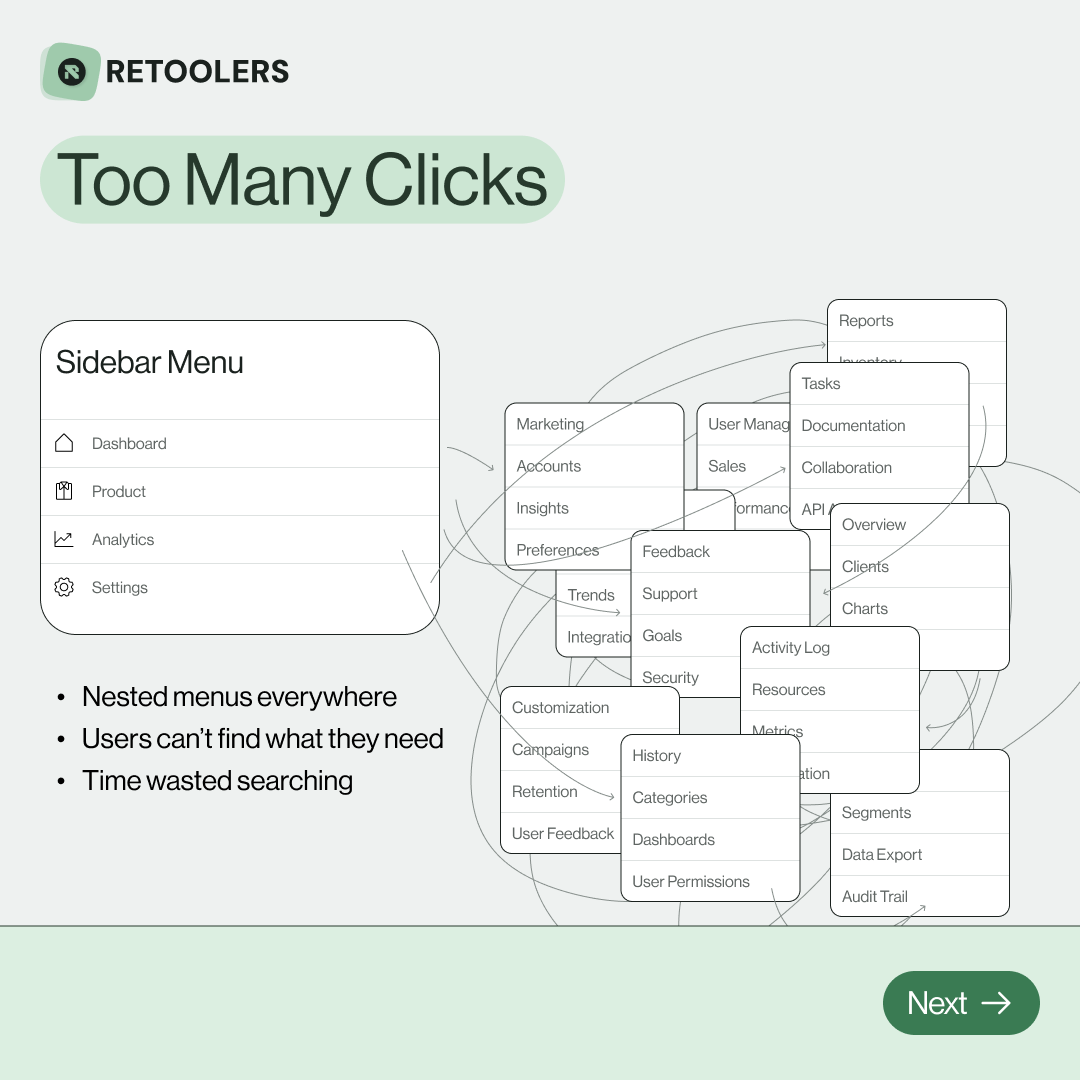

🔎 Internal tools often fail because of one simple thing: Navigation.

Too many clicks, buried menus, lost users.

We broke it down in this 4-slide carousel:

1️⃣ The problem (too many clicks)

2️⃣ The fix (clear navigation structure)

3️⃣ The Retool advantage (drag-and-drop layouts)

4️⃣ The impact (happier teams)

💡 With Retool, you can design internal tools that are easy to use, fast to build, and simple to maintain.

👉 Swipe through the carousel and see how better UX = better productivity.

📞 Ready to streamline your tools? Book a call with us at Retoolers.

🚀From idea → app in minutesBuilding internal tools used to take weeks.

Now, with AI App Generation in Retool, you can describe what you want in plain English and let AI do the heavy lifting.

At Retoolers, we help teams move faster by combining AI + Retool to create tools that actually fit their workflows.

👉 Check out our blog for the full breakdown: https://lnkd.in/gMAiqy9F

As part of our process, you’ll receive a FREE business analysis to assess your needs, followed by a FREE wireframe to visualize the solution. After that, we’ll provide you with the most accurate pricing and the best solution tailored to your business. Stay tuned—we’ll be in touch shortly!